Reading Time: 4 Minutes

What is Cross Prompt Injection Attack?

Cross Prompt Injection Attack (XPIA) is an emerging threat targeting generative AI systems such as copilots and content generation tools. These systems, which interact with both user inputs and external data, are particularly vulnerable to manipulation.

XPIA exploits scenarios where AI processes content from untrusted third-party sources like web pages, emails, or documents. Within this content, attackers embed malicious instructions that appear legitimate to the AI, causing it to misinterpret and act on them.

This can result in unintended behaviors or even full hijacking of the AI session. The attack highlights a critical vulnerability in how AI systems handle unfiltered or unsanitised external data, emphasizing the need for robust safeguards when integrating third-party content.

How Does XPIA Work?

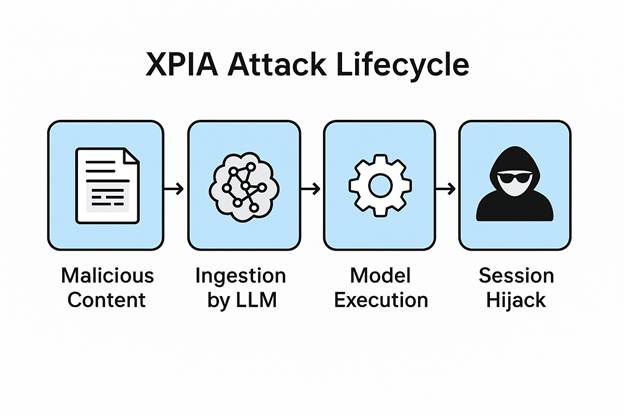

XPIA typically unfolds in three phases:

- Malicious Content Creation

Adversaries embed prompt-like instructions inside documents, web pages, or other content sources. - Ingestion by LLMs

A generative AI model processes this external content as part of its input, treating the malicious text as contextually valid. - Execution and Hijack

The AI interprets and acts on the malicious content—possibly revealing sensitive data or changing its behavior unexpectedly.

Root Vulnerability: LLMs are context-dependent and often over-trust structured input, making them vulnerable to content-based attacks.

XIPA Attack vector

XPIA can occur across various vectors:

- Emails – Hidden prompts in the body or metadata

- Plugins & Extensions – Embedded logic in add-ons

- Web Pages – Malicious scripts or structured data

- Documents – Hidden text or comments

- Images (Multi-modal) – Invisible prompt tokens embedded in visuals

XPIA vs UPIA: Understanding the Key Differences

XPIA (Cross-Prompt Injection Attack)

- Attacker: Third-party adversary

- Entry Point: External, untrusted content (e.g., web pages, documents, images)

- Description: XPIA exploits AI systems by injecting malicious instructions through content the AI processes from outside sources. These attacks are harder to detect and can hijack the AI’s behavior unknowingly.

UPIA (User Prompt Injection Attack)

- Attacker: End-user

- Entry Point: User-supplied prompt or input

- Description: UPIA involves a user crafting a prompt to manipulate the AI’s response. It’s a direct form of injection where the attacker interacts with the AI system intentionally to produce unexpected results.

Why does it happen?

There are several reasons why XIPA vulnerabilities occur, primarily rooted in the inherent design and operational challenges of large language models (LLMs). One major factor is the complexity of LLM pipelines, which involve intricate layers of data processing and model behaviors that are difficult to fully control or audit.

Another contributor is the presence of multi-modal inputs, where text, images, and metadata are combined—creating new attack surfaces due to the varied nature of data types.

The misuse of pattern recognition capabilities can also be exploited, allowing adversaries to craft inputs that manipulate model outputs in unintended ways.

Lastly, the lack of input sanitization for untrusted or user-generated content allows harmful or malicious instructions to be processed without proper filtering, further increasing the risk of exploitation.

Type of XIPA

- Embedding malicious instructions in Text

- Extracting and exploiting internal functions of an LLM

- Embedding malicious instructions in Images

- Showing harmful content in Multi-modal LLMs

XIPA attacks can manifest in various forms, each exploiting the vulnerabilities of large language models (LLMs) in unique ways. One such type involves embedding malicious instructions directly into text inputs, which can deceive the model into executing unintended actions.

Another method targets the internal mechanisms of the LLM itself, extracting and exploiting its underlying functions for unauthorized purposes. Visual data isn’t immune either—malicious commands can be subtly embedded in images, leveraging the model’s ability to interpret visual content.

Lastly, attackers may exploit multi-modal LLMs by injecting harmful content across different input types, such as combining text and image prompts, to trigger undesired or dangerous outputs. These types of XIPA attacks highlight the need for robust defenses in the development and deployment of AI systems.

How to Mitigate XPIA

To effectively mitigate XIPA attacks, several strategies have been developed.

- One such approach is the use of Purview, which enables policy-based text generation to ensure outputs align with organizational standards and compliance needs.

- Prompt Shields are another critical layer of defense, designed to detect and filter out malicious or manipulative inputs before they can influence the model’s behavior.

- Additionally, implementing Access Control measures helps limit the permissions of users and systems interacting with the model, reducing the risk of unauthorized or harmful actions.

- Finally, the ability to Constrain Model Behavior through system prompts plays a key role in guiding the model’s responses within predefined boundaries, further enhancing safety and reliability.

Together, these mitigation techniques form a robust framework for defending against potential XIPA threats.

Share your thoughts in the comment section and give your reactions for this post. Subscribe to sapiencespace for regular updates.

Click here to more such insightful content.

Reference: https://learn.microsoft.com/en-us/azure/ai-services/content-safety/concepts/jailbreak-detection

title image credits: unsplash content creators